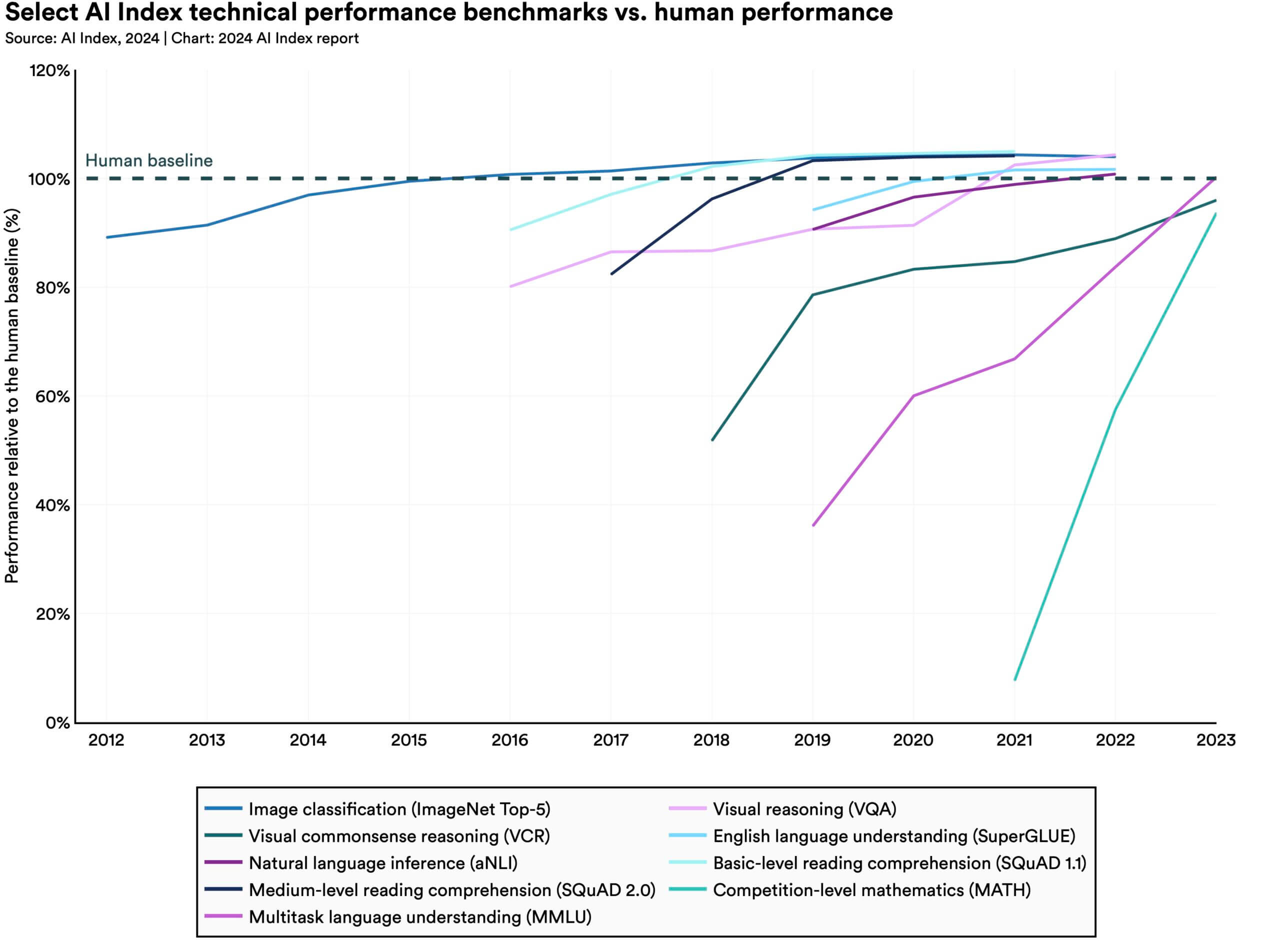

The latest Stanford report shows that AI systems now outperform humans on most benchmarks – but that’s not the biggest story here. What concerns us most is that we’re running out of meaningful ways to test AI capabilities.

Our current benchmarks are becoming obsolete faster than we can create new ones. Once AI systems hit the ceiling of a test, its scores stop telling one system apart from another, and we lose visibility into their true capabilities and limitations. This creates a serious blind spot for security and safety.

This isn’t just about AI getting smarter – it’s about the pace of advancement outstripping our ability to measure and understand it. For those of us working in AI safety, this creates a crucial challenge: How do we secure systems that are evolving faster than our testing frameworks?

The trajectory of AI advancement continues to steepen, with systems exhibiting compounding improvements in both speed and capability.

Source: Reddit